Executive Summary

AI systems are only as good as the data they learn from. Yet poor data quality remains a persistent barrier to AI success, with the potential to erode trust and slow AI adoption. To make AI reliable, data quality must be built into the foundation, not verified after the fact. By embedding proactive, automated quality frameworks, enterprises can help ensure their data is accurate, complete, reconciled, and timely, laying the groundwork for trustworthy, scalable AI.

From Reactive to Proactive

Analysts estimate that data scientists spend up to 70% of their time cleaning and preparing data, leaving little capacity for innovation. Analyst reports also suggest that enterprises implementing automated quality controls at the source can accelerate AI deployment cycles by up to three times.

This marks a key shift from reactive data cleansing to proactive data assurance. In this model, quality isn’t a checkpoint; it’s a design principle. Automation, reconciliation, and contextual validation converge to create self-correcting data ecosystems in which reliable data is a continuous outcome rather than a one-time project milestone.

Embedding Quality by Design

Across our modernization programs, we have observed that most data teams spend over half their time troubleshooting preventable quality issues. The answer lies in proactively embedding data quality by design across the entire data lifecycle, from ingestion and transformation through reconciliation and consumption.

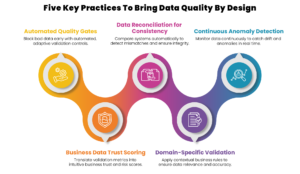

Let’s explore five key practices that bring “data quality by design” to life:

- Automated Quality Gates

Integrate quality gates at every ingestion and transformation point. Automated rules validate data for accuracy, completeness, timeliness, and conformity, blocking poor-quality data before it flows downstream and contaminates analytics and AI models. Mature implementations go a step further, adapting these gates dynamically based on data lineage or prior anomaly patterns.

- Business Data Trust Scoring

Bridge the gap between technical metrics and business meaning by translating validation results into business-relevant trust scores. A trust score condenses complex validation outcomes into a single, intuitive measure of reliability for business users. Executives can quickly assess whether the data fuelling AI or analytics is “boardroom-ready” and quantify the risk of acting on flawed insights. This, in turn, builds enterprise confidence in the data powering AI and decision systems.

- Data Reconciliation for Consistency

As data moves between systems, maintaining integrity is non-negotiable. Implement automated reconciliation that compares records between source and target systems to confirm accuracy and completeness. This process detects mismatches, duplication, or loss across complex data flows, making it essential for auditability and regulatory assurance while minimizing manual effort.

- Domain-Specific Validation

Generic quality checks can only go so far; true reliability comes from context-aware validation. Apply business logic that understands the domain, such as transaction-matching rules in banking, claim hierarchies in insurance, or patient outcomes in healthcare. These contextual, domain-based rules ensure data isn’t just technically valid but also meaningful, interpretable, and actionable for each business domain.

- Continuous Anomaly Detection

Quality isn’t static: data changes with every new source or process. AI-powered monitoring can continuously detect anomalies such as sudden data drift, missing attributes, or out-of-range values in real time. This “always-on” layer of validation helps ensure issues are addressed before they cascade into reports or models, keeping data ecosystems resilient and self-healing.
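To make the quality-gate, domain-validation, and trust-scoring practices above more concrete, here is a minimal Python sketch under stated assumptions. It assumes records arrive as dictionaries. The rule names, the banking-style debit rule, the 95% minimum pass rate, and the dimension weights are all hypothetical illustrations, not values prescribed by any framework or product. Each rule is tagged with a quality dimension. Records that fail any rule are quarantined, and per-dimension pass rates are rolled up into a single weighted score from 0 to 100.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable

Record = dict  # one row from an ingestion or transformation batch

@dataclass
class Rule:
    name: str
    dimension: str                   # quality dimension this rule contributes to
    check: Callable[[Record], bool]  # returns True when the record passes

# Fixed "now" so the example is reproducible; a real gate would use the load time.
NOW = datetime(2024, 1, 31, 12, 0, tzinfo=timezone.utc)

def is_amount(r: Record) -> bool:
    return isinstance(r.get("amount"), (int, float))

# Hypothetical rules; real rule sets would come from data owners and stewards.
RULES = [
    Rule("customer_id present", "completeness", lambda r: bool(r.get("customer_id"))),
    Rule("amount is numeric", "conformity", is_amount),
    Rule("amount in plausible range", "accuracy",
         lambda r: is_amount(r) and 0 < r["amount"] <= 1_000_000),
    Rule("event within 24h", "timeliness",
         lambda r: r.get("event_time") is not None and NOW - r["event_time"] <= timedelta(hours=24)),
    # Hypothetical domain-specific (banking) rule: every debit needs a counterparty.
    Rule("debit has counterparty", "domain",
         lambda r: r.get("type") != "DEBIT" or bool(r.get("counterparty"))),
]

# Hypothetical business weights per dimension (they sum to 1.0).
WEIGHTS = {"accuracy": 0.3, "completeness": 0.25, "timeliness": 0.15,
           "conformity": 0.1, "domain": 0.2}

def run_gate(batch, rules, min_pass_rate=0.95):
    """Split a batch into passed/quarantined records and compute per-dimension pass rates."""
    passed, quarantined = [], []
    checks, passes = {}, {}
    for rec in batch:
        failed = []
        for rule in rules:
            checks[rule.dimension] = checks.get(rule.dimension, 0) + 1
            if rule.check(rec):
                passes[rule.dimension] = passes.get(rule.dimension, 0) + 1
            else:
                failed.append(rule.name)
        if failed:
            quarantined.append((rec, failed))  # held back with the reasons it failed
        else:
            passed.append(rec)
    rates = {dim: passes.get(dim, 0) / n for dim, n in checks.items()}
    # The gate stays closed (batch blocked downstream) if too few records pass every rule.
    gate_open = bool(batch) and len(passed) / len(batch) >= min_pass_rate
    return passed, quarantined, rates, gate_open

def trust_score(rates, weights):
    """Weighted average of per-dimension pass rates, on a 0-100 scale."""
    total = sum(weights[d] for d in rates)
    return 100 * sum(rates[d] * weights[d] for d in rates) / total

batch = [
    {"customer_id": "C1", "amount": 120.0, "type": "CREDIT", "event_time": NOW - timedelta(hours=1)},
    {"customer_id": "C2", "amount": 50.0, "type": "DEBIT", "event_time": NOW - timedelta(hours=2)},
    {"customer_id": None, "amount": 75.0, "type": "CREDIT", "event_time": NOW - timedelta(hours=3)},
    {"customer_id": "C4", "amount": 300.0, "type": "DEBIT", "counterparty": "ACC-9",
     "event_time": NOW - timedelta(minutes=30)},
]
passed, quarantined, rates, gate_open = run_gate(batch, RULES)
score = trust_score(rates, WEIGHTS)
for rec, reasons in quarantined:
    print(rec.get("customer_id"), "quarantined:", reasons)
print(f"gate_open={gate_open} trust_score={score:.1f}")
```

Weighting lets business owners decide which failures matter most. In this sketch, a failed domain rule lowers the score more than a failed conformity check.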
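Source-to-target reconciliation, as described in the practices above, can be sketched in the same style. The `reconcile` function below is a hypothetical helper, not a specific product’s API. It assumes both sides are lists of dictionaries that share a business key (`txn_id` here). It reports row counts, control totals, records lost or unexpectedly added, duplicates, and field-level value mismatches.

```python
from collections import Counter
from decimal import Decimal

def reconcile(source, target, key, compare_fields, total_field):
    """Compare source and target record sets by business key (hypothetical helper)."""
    src_counts = Counter(r[key] for r in source)
    tgt_counts = Counter(r[key] for r in target)
    src_by_key = {r[key]: r for r in source}
    tgt_by_key = {r[key]: r for r in target}  # duplicates are reported separately below

    # Field-level comparison for keys present on both sides.
    mismatches = []
    for k in sorted(src_by_key.keys() & tgt_by_key.keys()):
        for field in compare_fields:
            s, t = src_by_key[k].get(field), tgt_by_key[k].get(field)
            if s != t:
                mismatches.append((k, field, s, t))

    report = {
        "row_counts": (len(source), len(target)),
        "control_totals": (sum(r[total_field] for r in source),
                           sum(r[total_field] for r in target)),
        "missing_in_target": sorted(src_counts.keys() - tgt_counts.keys()),   # data loss
        "unexpected_in_target": sorted(tgt_counts.keys() - src_counts.keys()),
        "duplicates_in_target": sorted(k for k, n in tgt_counts.items() if n > 1),
        "value_mismatches": mismatches,
    }
    report["balanced"] = (
        report["row_counts"][0] == report["row_counts"][1]
        and report["control_totals"][0] == report["control_totals"][1]
        and not (report["missing_in_target"] or report["unexpected_in_target"]
                 or report["duplicates_in_target"] or mismatches)
    )
    return report

source = [
    {"txn_id": 1, "amount": Decimal("100.00"), "status": "OPEN"},
    {"txn_id": 2, "amount": Decimal("250.50"), "status": "SETTLED"},
    {"txn_id": 3, "amount": Decimal("75.25"), "status": "OPEN"},
]
target = [
    {"txn_id": 1, "amount": Decimal("100.00"), "status": "OPEN"},
    {"txn_id": 2, "amount": Decimal("205.50"), "status": "SETTLED"},  # transposed digits
    {"txn_id": 2, "amount": Decimal("205.50"), "status": "SETTLED"},  # loaded twice
    {"txn_id": 4, "amount": Decimal("10.00"), "status": "OPEN"},      # not in source
]
report = reconcile(source, target, key="txn_id",
                   compare_fields=["amount", "status"], total_field="amount")
for name, value in report.items():
    print(f"{name}: {value}")
```

Amounts use `Decimal` so that control totals compare exactly, rather than drifting with floating-point rounding.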

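For continuous anomaly detection, the practices above describe AI-powered monitoring. The sketch below substitutes a much simpler statistical technique, a rolling z-score. It shows the basic idea of comparing each new observation against a recent baseline. It is not an AI model. The monitored metric (daily row counts for one table), the seven-day window, and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev

def detect_anomalies(values, window=7, z_threshold=3.0):
    """Flag values that sit more than z_threshold standard deviations
    from the mean of the preceding `window` observations."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is treated as anomalous.
            if values[i] != mu:
                flagged.append((i, values[i], float("inf") if values[i] > mu else float("-inf")))
            continue
        z = (values[i] - mu) / sigma
        if abs(z) > z_threshold:
            flagged.append((i, values[i], round(z, 1)))
    return flagged

# Hypothetical daily row counts for one table; day 8 arrives badly truncated.
daily_rows = [10120, 9980, 10050, 10210, 9900, 10080, 10010, 10150, 3400, 10060]
for day, value, z in detect_anomalies(daily_rows):
    print(f"day {day}: {value} rows (z = {z})")
```

Here the truncated day stays in the baseline for the following days, inflating the standard deviation. A production monitor would typically exclude flagged points from the baseline, or use a more robust statistic such as the median absolute deviation.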
The Role of Data Stewardship

Automation delivers consistency, but you need data stewardship to ensure accountability. A structured stewardship process helps you define clear ownership for data domains, policies, and quality metrics. Stewards act as custodians: they resolve exceptions, govern standards, and ensure that automated systems align with business intent.

A mature stewardship model connects technology-driven quality controls with human oversight, creating a feedback loop that continuously improves trust and usability across the enterprise. Together, these practices and roles can form an intelligent data quality fabric, a system that learns, adapts, and dynamically corrects as your data evolves.

The Gains

Organizations that embed data quality, reconciliation, and stewardship into their architecture see tangible gains: fewer downstream errors, faster delivery, and measurable improvement in AI accuracy. Automated validation and reconciliation can cut engineering rework by up to 50%, while data stewardship ensures sustained governance and accountability. When data is trusted, AI outcomes become explainable, auditable, and reliable, unlocking executive confidence.

Where to Start

Data quality is a strategic enabler of AI readiness. Data leaders can start by identifying where quality checks, reconciliations, and stewardship activities occur today and shifting them upstream. Combine automation with stewardship and continuous monitoring to create a self-healing data ecosystem on platforms such as Azure, AWS, Databricks, and Snowflake.

To get started, accelerate your modernization journey with an Enterprise Data Strategy Assessment led by experts at OwlSure. Click here to learn how OwlSure modernizes enterprise data estates, unifies enterprise-wide data, and drives trusted intelligence at scale.

Authors:

Venkata Bhaskar, Data Architect

Renji Krishnan, Senior Product Marketing Manager