Executive Summary

AI has become the engine of innovation, yet trust remains its greatest bottleneck. Many enterprises have invested in AI pilots and data platforms but still face challenges of transparency, accountability, and ethical oversight. To scale AI responsibly, organizations must embed governance, lineage, and ethics into their architecture. They also need to build a semantic layer, powered by retrieval-augmented generation (RAG), that grounds AI reasoning in governed, auditable enterprise knowledge and unifies data meaning to ensure consistent, explainable, and trustworthy AI outcomes.

From Oversight to Intelligent Governance

The conversation around AI has evolved from innovation to accountability. Analysts report that nearly two-thirds of CDOs identify the lack of data governance as one of the top barriers to scaling AI responsibly, while organizations that automate governance and lineage see more than twice the executive trust in AI outputs.

This evolution marks a move from manual oversight to intelligent governance, where automation, lineage, and ethical design converge into a unified “Trust Fabric”. Data leaders are no longer asking how to comply but how to inspire confidence, balancing innovation with integrity as they evolve from compliance-driven programs to trust-driven enterprises.

Building a Trust Fabric for Scalable AI

Across our modernization and AI initiatives, we see a consistent pattern: technology succeeds when trust is designed in from the start. Governance, lineage, and ethics should not be external layers of the architecture but must become core architectural capabilities.

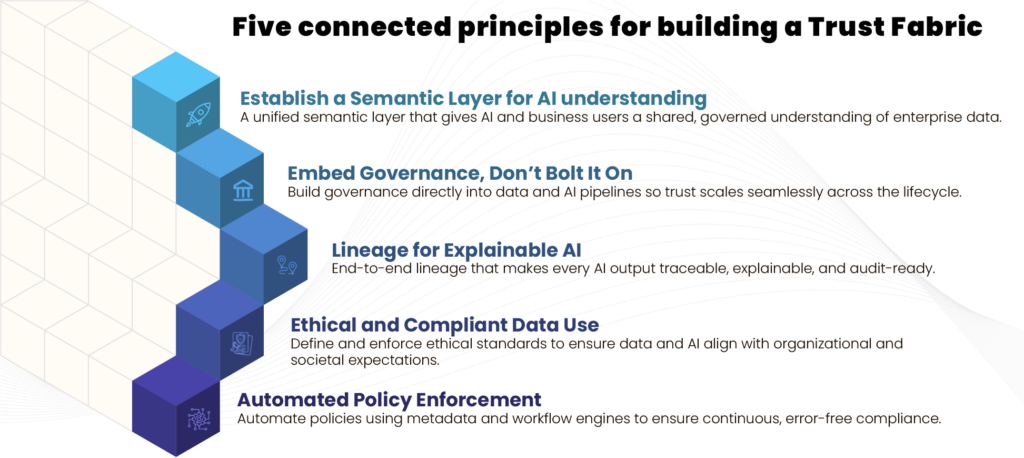

A practical path forward lies in five connected principles:

- Establish a Semantic Layer for AI understanding

Develop a unified semantic layer focused on business consumers, bridging data, AI models, and business meaning. This layer acts as the “language” through which IT, business, and AI agents can interpret data consistently. By defining shared metrics, business terms, and relationships, the semantic layer reduces ambiguity, supports explainability, and strengthens governance. It lets AI systems operate on trusted, context-rich data, enabling consistent decisions and transparency across the organization. With RAG, the semantic layer becomes an active context fabric, grounding AI reasoning in governed, traceable, and policy-compliant data.

- Embed Governance, Don’t Bolt It On

Design governance within data pipelines and AI workflows from inception, not around them. Use automated controls and checks for access, consent, and retention that function quietly but consistently across the data lifecycle. When governance becomes seamless, trust becomes easier to scale.

- Lineage for Explainable AI

Capture lineage at every transformation and make it visible through intuitive tools. End-to-end traceability helps data teams explain decisions, prove accountability, and demonstrate audit readiness. In RAG-enabled architectures, lineage links AI outputs to their underlying data sources, creating transparent, traceable, and explainable AI reasoning. Together, these measures can form the foundation for executive and regulatory confidence.

- Ethical and Compliant Data Use

Define ethical standards for data collection, model fairness, and consent management, and review them continually. Embedding ethics as an evolving framework helps ensure AI aligns with organizational values, legal requirements, and emerging societal expectations.

- Automated Policy Enforcement

Deploy metadata-driven rules, policy engines and workflow automation to enforce governance dynamically. This minimizes manual intervention, reduces error, and ensures continuous compliance even as data, models, and regulations change.
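
As a rough illustration of metadata-driven enforcement, the sketch below evaluates access, consent, and retention rules against a dataset's catalog metadata. It is a minimal example under stated assumptions, not a reference implementation: the `DatasetMetadata` and `AccessRequest` classes, the `pii` tag, the `privacy_approved` role, and the `model_training` purpose are all hypothetical names chosen for this example, and a production policy engine would load its rules from a governed catalog rather than hard-code them.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DatasetMetadata:
    """Hypothetical catalog entry; field names are illustrative only."""
    name: str
    tags: set[str]
    consent_given: bool
    created: date
    retention_days: int


@dataclass
class AccessRequest:
    """Hypothetical request describing who wants the data and why."""
    user_roles: set[str]
    purpose: str


def evaluate(meta: DatasetMetadata, req: AccessRequest, today: date) -> list[str]:
    """Return policy violations; an empty list means the request is allowed."""
    violations = []
    # Access rule: PII-tagged data requires an approved role.
    if "pii" in meta.tags and "privacy_approved" not in req.user_roles:
        violations.append("access: role not approved for PII")
    # Consent rule: training on personal data requires recorded consent.
    if "pii" in meta.tags and req.purpose == "model_training" and not meta.consent_given:
        violations.append("consent: no consent recorded for model training")
    # Retention rule: data past its retention window must not be served.
    if today > meta.created + timedelta(days=meta.retention_days):
        violations.append("retention: dataset is past its retention window")
    return violations


if __name__ == "__main__":
    profiles = DatasetMetadata("customer_profiles", {"pii"}, False, date(2023, 1, 1), 365)
    request = AccessRequest({"analyst"}, "model_training")
    for violation in evaluate(profiles, request, date(2025, 1, 1)):
        print(violation)
```

Because the rules read only metadata, the same check can run at pipeline build time, at query time, and before model training without manual review at each step.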

Together, these principles can form a governance-by-design architecture where transparency, accountability, and ethics are orchestrated continuously rather than applied reactively.
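
To make the semantic-layer and lineage principles more concrete, here is a small sketch of how RAG-style grounding might carry lineage along with the retrieved context. Everything in it is an assumption made for illustration: `GovernedTerm`, `retrieve`, and `build_prompt` are hypothetical names, the table names are invented, and the word-overlap scoring is a toy stand-in for the embedding-based retrieval a real system would more likely use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernedTerm:
    """Hypothetical semantic-layer entry: a business term plus its lineage."""
    term: str
    definition: str
    source: str      # upstream table or system the definition traces to
    approved: bool   # whether data stewards have certified the entry


def retrieve(question: str, terms: list[GovernedTerm], k: int = 2) -> list[GovernedTerm]:
    """Rank approved terms by word overlap with the question (toy scoring)."""
    q_words = set(question.lower().split())
    scored = []
    for t in terms:
        if not t.approved:
            continue  # ungoverned entries never reach the model
        overlap = len(q_words & set((t.term + " " + t.definition).lower().split()))
        if overlap:
            scored.append((overlap, t))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [t for _, t in scored[:k]]


def build_prompt(question: str, context: list[GovernedTerm]) -> tuple[str, list[str]]:
    """Return the grounded prompt and the lineage (sources) behind it."""
    lines = [f"- {t.term}: {t.definition} [source: {t.source}]" for t in context]
    prompt = "Answer using only this governed context:\n" + "\n".join(lines)
    prompt += f"\n\nQuestion: {question}"
    return prompt, [t.source for t in context]
```

Returning the source list alongside the prompt means every generated answer can be logged with the governed definitions it relied on, which is the link between AI outputs and underlying data that the lineage principle describes.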

The Impact of Trust

Enterprises that embed this trust fabric early will not only deploy AI faster but also deploy it responsibly, earning the confidence of regulators, executives, and customers alike and improving adoption. With platforms like Azure and Databricks, automated governance and lineage become part of the enterprise fabric, helping to shorten audit cycles, accelerate model approvals, and reduce uncertainty in AI-driven outcomes.

Moving Forward

AI will only scale as far as trust allows it. And building trust at scale starts with embedding accountability and transparency into your data foundation. Now is the time for data leaders to move from reactive compliance to proactive governance. Begin by assessing where governance and lineage reside in your current architecture. Then evolve toward governance by design, so that policies, data, and models coexist within a transparent, ethical, and auditable ecosystem.

To begin, start your modernization journey with an Enterprise Data Strategy Assessment led by experts at OwlSure. Click here to learn how OwlSure modernizes enterprise data estates, unifies enterprise-wide data, and drives trusted intelligence at scale.

Authors:

Venkata Bhaskar, Data Architect

Renji Krishnan, Senior Product Marketing Manager